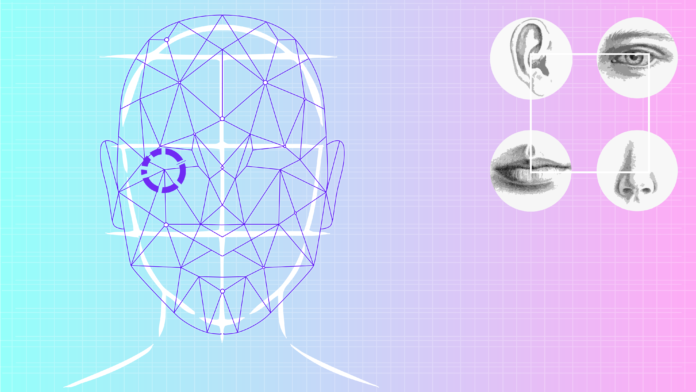

If you rode the metro in the Brazilian city of Sao Paulo in 2018, you might have come across a new kind of advertising. Glowing interactive doors displayed content targeted at individual passengers, based on assumptions that artificial intelligence made about their appearance. Fitted with facial recognition cameras, the screens made instantaneous decisions about passengers’ gender, age and emotional state, then served them ads accordingly.

Digital rights groups said the technology violated the rights of trans and non-binary people because it assigned gender to individuals based on the physical shape of their face, potentially making incorrect judgments about their identity. It also relied on a strictly binary, male-female model of gender, ignoring the existence of non-binary people.

“If these systems cannot conceptualize that you exist, then you are essentially living in a space that is constantly misgendering you and informing you that you’re not real,” said Os Keyes, an AI researcher at the University of Washington.

Keyes, who is British, is part of a campaign by privacy and LGBTQ+ advocates to convince the European Commission to ban automated gender recognition technology. The EU is planning to reveal a new proposal on the regulation of artificial intelligence this month. Campaigners are hoping it will outlaw tech that makes assumptions about gender and sexual orientation.

“We want to carve out a path in artificial intelligence that’s not the Chinese or the U.S. laissez-faire one, but one that’s based on human rights,” said Daniel Leufer, a Europe policy analyst at the digital rights group Access Now.

Automated gender recognition tech is already an everyday reality. One example is Giggle, a women-only social media app that first made headlines in 2020. Giggle uses facial recognition AI to “verify” users’ gender, by analyzing a selfie submitted by applicants.

“It’s science!” the app’s pink-hued website cheerfully stated, before admitting that “due to the gender-verification software that Giggle uses, trans-girls will experience trouble with being verified.” Users and the media criticized the app as trans-exclusionary and based on outdated ideas of what women are supposed to look like. The app’s website has since been changed and no longer mentions how trans women can join.

Giggle’s CEO Sall Grover told me that the app was moderated by humans who “watch behind the screens at all times to ensure that Giggle remains a female space,” adding that “there are apps specifically for gay men or specifically for transgender people and we support them.”

Grover said that “trans-identified females are welcome on the Giggle platform, if they choose,” but when asked to specify how trans women should navigate the gender recognition software, she simply responded, “Giggle is an app for females.” Just before this piece was published, Grover said in a tweet that the app was “strictly for biological females.”

Automated gender recognition is yet another tool, activists say, to reinforce fixed notions of gender and the stereotypes and biases that accompany them. The technology itself “works very badly for trans people and it doesn’t work at all for non-binary people,” said Yuri Guaiana of All Out, an international LGBTQ+ advocacy group that is campaigning for the ban.

Advertising revenue often gives companies an incentive to assign gender to users. This month, Access Now sent a letter to the music streaming platform Spotify, criticizing it for technology it developed to detect a user’s gender by analyzing the way they speak, in order to make music recommendations.

“This advanced technology, that we’re told is going to revolutionize society, is actually kind of cementing conservative, outdated social hierarchies,” said Leufer.

Spotify did not respond to a request for comment.

Activists are concerned that if the technology is not outlawed, it will soon become ubiquitous. There is also a worry that in authoritarian countries, where LGBTQ+ people are criminalized, it could be implemented to “out” vulnerable individuals.

“Frankly, all the trans people I know, myself included, have enough problems with people misgendering us, without robots getting in on the fun,” said Keyes.
